High-speed broadband (500 Mbps, 1 Gbps, and beyond) has transformed how businesses work. But it has also introduced a problem many IT teams don’t notice until users start complaining:

Bufferbloat.

It is the reason you can have a "fast" fiber line and still get robotic VoIP audio, choppy Teams calls, and frustrated users saying "the internet is terrible."

And the worst part? Your ISP speed tests will look perfect.

What is Bufferbloat? (The Traffic Jam in the Fast Lane)

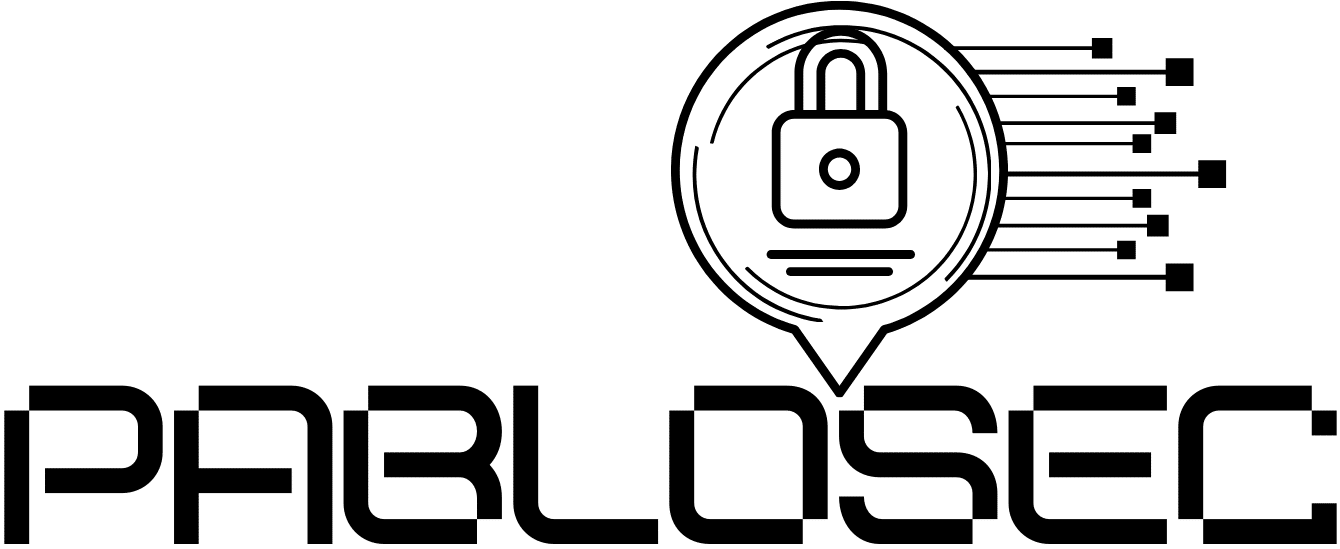

Bufferbloat is high latency that occurs exactly when the connection is busy.

Network devices (routers and firewalls) have "buffers". Think of them as waiting rooms where data packets queue when the link is busy instead of being dropped.

This sounds helpful, but in reality it carries a hidden cost:

- The Problem: Your urgent VoIP packet (which needs to get through now) gets stuck in line behind 5,000 packets of a non-urgent file upload.

- The Result: Latency spikes from 20 ms to 200 ms+. The upload finishes fast, but the call freezes.
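To put a rough number on that wait, here is a minimal sketch of the arithmetic. It assumes full-size 1,500-byte packets and a single first-in, first-out queue; the function name `queueing_delay_ms` is made up for illustration. Under those assumptions, 5,000 queued packets add about 60 ms on a 1 Gbps link and about 600 ms on a 100 Mbps link.

```python
def queueing_delay_ms(packets_ahead: int, packet_bytes: int, link_mbps: float) -> float:
    """Time the link needs to drain everything queued ahead of our packet, in ms."""
    bits_ahead = packets_ahead * packet_bytes * 8
    seconds = bits_ahead / (link_mbps * 1_000_000)
    return seconds * 1000


# Assumption: 5,000 full-size (1,500-byte) upload packets sit ahead of one VoIP packet.
for link_mbps in (1000, 100):
    delay = queueing_delay_ms(5000, 1500, link_mbps)
    print(f"{link_mbps} Mbps link: about {delay:.0f} ms of added delay")
```

The slower the link that holds the queue, the longer the same backlog takes to drain, which is why a full buffer in front of a modest uplink hurts so much.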

Why "Throughput" is a Vanity Metric

In the firewall industry, marketing focuses on Throughput (Gbps). But for a user sitting at a desk, the metrics that actually define "speed" are Latency and Jitter.

A firewall that can push 10 Gbps of raw traffic but has poor queue management will feel "slower" to a user than a 100 Mbps firewall that prioritizes traffic intelligently.

In business terms:

- Throughput = Moving files.

- Latency Stability = Productivity.
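As a rough illustration of the two metrics, the sketch below summarizes a list of round-trip times. The sample values are invented, and jitter is computed here as the mean absolute difference between consecutive samples, which is one simple definition among several.

```python
from statistics import mean


def latency_and_jitter(rtts_ms):
    """Return (mean latency, jitter) in ms for a sequence of round-trip times.

    Jitter is taken as the mean absolute change between consecutive samples.
    """
    diffs = [abs(later - earlier) for earlier, later in zip(rtts_ms, rtts_ms[1:])]
    jitter = mean(diffs) if diffs else 0.0
    return mean(rtts_ms), jitter


# Hypothetical samples: a stable link versus one with a bloated queue.
steady = [20, 21, 20, 22, 21]
bloated = [20, 180, 35, 210, 40]

for label, samples in (("steady", steady), ("bloated", bloated)):
    latency, jitter = latency_and_jitter(samples)
    print(f"{label}: latency {latency:.1f} ms, jitter {jitter:.1f} ms")
```

Both links could post the same throughput number on a speed test; only the latency and jitter figures show which one will hold a call together.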

The Firewall’s Role: Raw Power (ASIC/NPU) vs. Intelligence

This is where hardware selection becomes critical. Modern firewalls (such as FortiGate and Palo Alto Networks) rely on specialized chips called ASICs (Application-Specific Integrated Circuits) or NPUs (Network Processing Units).

These chips are incredible engines designed to offload traffic from the main CPU, allowing the firewall to push massive amounts of data with near-zero latency.

But here is the catch:

Standard hardware offloading is designed to move packets fast, not necessarily to organize them smartly.

The Entry-Level Trap: On cheaper devices, enabling complex "Traffic Shaping" or QoS often forces the traffic off the fast ASIC and onto the slow main CPU. You turn on QoS to fix the lag, but your throughput crashes because the CPU bottlenecks.

The Enterprise Advantage: High-end firewalls with modern NPUs are designed to handle specific shaping tasks in hardware (or have CPUs powerful enough to handle the shaping load without choking).

The Lesson: You don't just need a firewall that can pass 1 Gbps. You need a firewall that can manage 1 Gbps without disabling its hardware acceleration engines.

How to Fix Bufferbloat (The "95% Rule")

The solution is rarely "buy more bandwidth." The solution is Smart Queue Management (SQM) applied at the edge.

To fix it, we have to tell the firewall to take control of the queue away from the ISP’s equipment:

Shape Below the Line Rate: We configure your firewall to limit outbound traffic to 90–95% of the actual physical link speed (e.g., on a 1 Gbps line, we cap it at 950 Mbps).

Why? Because the firewall sends slightly less than the link can carry, the buffer on the ISP side stays close to empty. The queue forms in your firewall instead, where we can control it.

Prioritize the Packet, Not the Port: We use the firewall's inspection engine to identify real-time apps (Teams, Zoom, VoIP) and jump them to the front of the line, while bulk data waits its turn.
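The two steps above can be sketched in a few lines. This is a toy model, not firewall configuration: the class labels, the `PriorityQueueShaper` name, and the strict-priority policy are all assumptions chosen for illustration, and real devices often use more sophisticated queue management.

```python
import heapq
from itertools import count

REALTIME, BULK = 0, 1  # illustrative labels; lower number = higher priority


def shaped_rate_mbps(link_mbps: float, fraction: float = 0.95) -> float:
    """Shaping rate as a fraction of link speed (the text suggests 90-95%)."""
    if not 0 < fraction < 1:
        raise ValueError("fraction must be between 0 and 1")
    return link_mbps * fraction


class PriorityQueueShaper:
    """Strict-priority queue: real-time packets always leave before bulk ones."""

    def __init__(self):
        self._heap = []
        self._order = count()  # tie-breaker keeps FIFO order within a class

    def enqueue(self, priority: int, name: str) -> None:
        heapq.heappush(self._heap, (priority, next(self._order), name))

    def dequeue(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


shaper = PriorityQueueShaper()
for i in range(3):
    shaper.enqueue(BULK, f"upload-{i}")
shaper.enqueue(REALTIME, "voip-0")  # arrives last, leaves first

send_order = [shaper.dequeue() for _ in range(len(shaper))]
print(f"shape to about {shaped_rate_mbps(1000):.0f} Mbps")
print(send_order)
```

The VoIP packet is sent ahead of the three upload packets even though it arrived after them. That reordering is only possible because the shaping cap keeps the queue inside the firewall rather than in equipment you do not control.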

Final Thought

Bufferbloat is a hidden productivity tax.

If your users complain during peak usage, don’t buy more bandwidth first.

Measure: Latency under load.

Then Fix: Queue management and shaping at the edge.
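One simple way to express "latency under load" as a number is to compare round-trip times taken on an idle link with those taken while the link is saturated. The sketch below assumes you already have both sets of samples (collecting them is not shown), and the values and the `added_latency_ms` name are hypothetical.

```python
from statistics import median


def added_latency_ms(idle_rtts_ms, loaded_rtts_ms):
    """Extra delay under load: median loaded RTT minus median idle RTT, in ms."""
    return median(loaded_rtts_ms) - median(idle_rtts_ms)


# Hypothetical samples: pings on a quiet link, then during a large upload.
idle = [19, 20, 21, 20, 22]
loaded = [180, 210, 195, 230, 205]

print(f"added latency under load: {added_latency_ms(idle, loaded)} ms")
```

Running the same comparison before and after shaping is a straightforward way to check whether the fix worked.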

If you tell us your ISP speed, user count, and critical apps, Pablosec can recommend a firewall sizing and configuration strategy that prioritizes stability, because in 2026 consistency beats raw speed.