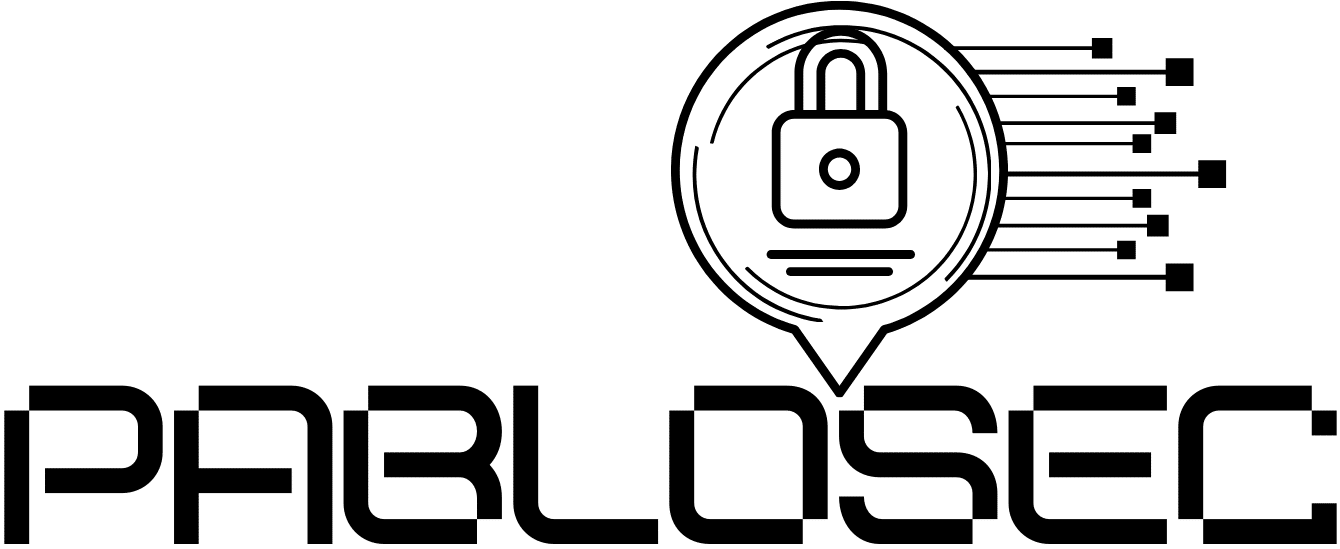

Ethernet has been the default language of networking for decades. It’s everywhere: campus networks, data centres, ISPs, cloud backbones, and enterprise environments. Its success comes from one word: universality. It’s simple enough to deploy at scale, flexible enough to evolve, and open enough to power an entire ecosystem.

But something has changed in the last few years.

AI training clusters and high-performance computing (HPC) have pushed network requirements into a new category. Traditional data centre traffic is mostly “north-south” (clients to servers) and fairly bursty. AI traffic is different: it’s massively parallel, sustained, and extremely sensitive to latency, loss, and jitter at scale.

This is where Ultra Ethernet enters the conversation.

What is Ultra Ethernet?

Ultra Ethernet is an industry effort to make Ethernet perform better for modern, high-performance workloads, especially AI and HPC clusters, without abandoning the Ethernet ecosystem.

Think of it as:

“Ethernet, redesigned for the extreme demands of AI fabrics.”

The goal is not to invent a completely new networking universe. The goal is to take Ethernet, the technology the world already runs on, and push it into the next era.

Why “normal Ethernet” struggles with AI clusters

AI training workloads generate a lot of east-west traffic between GPUs, accelerators, and compute nodes. The network becomes part of the compute system. If the network introduces delays, packet loss, or unpredictable congestion, it doesn’t just slow down traffic: it slows down the training job, increases costs, and wastes expensive GPU time.

The biggest pain points are typically:

- Congestion at scale: many nodes communicating simultaneously
- Tail latency: the slowest flows can dictate overall job completion time
- Loss sensitivity: retransmissions can be costly in tightly synchronized workloads
- Inefficient collective operations: AI training depends on frequent “all-to-all” and “all-reduce” patterns
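To make the tail-latency point concrete, here is a minimal sketch in Python. It assumes a deliberately simplified model that does not come from any specification: every flow is independent, and each one is either fast or, with a small probability, slow. The function names and all numbers are illustrative only.

```python
def prob_step_hits_tail(num_flows, tail_prob):
    """Probability that at least one of num_flows independent flows is slow."""
    return 1.0 - (1.0 - tail_prob) ** num_flows


def expected_step_time_us(num_flows, tail_prob, base_us, slow_us):
    """Expected duration of a synchronized step that waits for its slowest flow.

    Simplifying assumption: a flow takes base_us normally and slow_us when it
    lands in the tail, so the step takes slow_us if any single flow is slow.
    """
    p = prob_step_hits_tail(num_flows, tail_prob)
    return base_us + (slow_us - base_us) * p


# Hypothetical numbers: 1% of flows are slow (100 us instead of 10 us).
for flows in (1, 8, 64, 512):
    print(flows, round(expected_step_time_us(flows, 0.01, 10.0, 100.0), 1))
```

Under these assumptions, a step that waits on a single flow is rarely delayed, but a step that waits on 512 flows hits a slow one more than 99% of the time. That is why a rare slow flow matters so much more in a tightly synchronized job than in ordinary data centre traffic.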

In short: AI traffic is less forgiving, and the economics are brutal. When a GPU cluster is underutilised, you’re burning money.
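A back-of-the-envelope calculation shows how quickly idle GPU time adds up. The cluster size, hourly rate, and utilisation figures below are made up for illustration and are not taken from any real deployment.

```python
def idle_gpu_cost(num_gpus, hourly_rate, hours, utilisation):
    """Money spent on GPU time that did no useful work."""
    if not 0.0 <= utilisation <= 1.0:
        raise ValueError("utilisation must be between 0 and 1")
    return num_gpus * hourly_rate * hours * (1.0 - utilisation)


# Hypothetical cluster: 1,000 GPUs at 2.00 per GPU-hour, running for 24 hours.
wasted_at_90 = idle_gpu_cost(1000, 2.00, 24, 0.90)
wasted_at_60 = idle_gpu_cost(1000, 2.00, 24, 0.60)
print(round(wasted_at_90), round(wasted_at_60))
```

In this made-up example, one day at 90% utilisation wastes about 4,800 in GPU time, while one day at 60% wastes about 19,200. If the network is what keeps GPUs waiting, that difference is a networking cost.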

What Ultra Ethernet is trying to improve

Ultra Ethernet initiatives aim to make Ethernet more suitable for these environments, typically through:

- Better congestion control (more consistent performance under heavy load)
- Lower and more predictable latency (reducing tail latency)
- Smarter load balancing across paths (to avoid hotspots)
- Stronger reliability behaviours for large-scale fabrics
- Optimisations for AI collective communications patterns
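The load-balancing item can be illustrated with a small sketch. It compares two generic strategies: per-flow hashing, where every packet of a flow follows the same path, and per-packet spraying, where packets are spread across all paths. This is an illustration of the general idea, not a description of the mechanism in the Ultra Ethernet specification; the function names, flow labels, and the use of CRC32 as the hash are assumptions made for the example.

```python
import zlib


def hash_flows_to_paths(flow_sizes, num_paths):
    """Per-flow hashing: every packet of a flow is pinned to one path."""
    load = [0] * num_paths
    for flow_id, packets in flow_sizes.items():
        path = zlib.crc32(flow_id.encode()) % num_paths
        load[path] += packets
    return load


def spray_packets(flow_sizes, num_paths):
    """Per-packet spraying: packets are dealt round-robin across all paths."""
    load = [0] * num_paths
    next_path = 0
    for packets in flow_sizes.values():
        for _ in range(packets):
            load[next_path] += 1
            next_path = (next_path + 1) % num_paths
    return load


# Hypothetical workload: four large, long-lived GPU-to-GPU flows over four paths.
flows = {"gpu0->gpu4": 4000, "gpu1->gpu5": 4000,
         "gpu2->gpu6": 4000, "gpu3->gpu7": 4000}
print("per-flow hashing :", hash_flows_to_paths(flows, 4))
print("per-packet spray :", spray_packets(flows, 4))
```

With only a handful of large flows, per-flow hashing can place two of them on the same path and leave another path idle, which is exactly the kind of hotspot the list refers to. Spraying keeps the paths within one packet of each other, but packets can then arrive out of order, so the receiving side has to tolerate reordering.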

You can think of it as Ethernet catching up to a world where the network is no longer “just connectivity”; it’s an active component of compute efficiency.

Is Ultra Ethernet a replacement for Ethernet?

This is the key nuance.

Ultra Ethernet is best understood as a next-generation evolution of Ethernet, not something that makes “classic Ethernet” disappear overnight. Traditional Ethernet will remain the standard for enterprise and general data centre use for a long time because it works well and is deeply embedded.

However, in the specific domain of AI data centres, the direction is clear: networks must become faster, more deterministic, and better at handling synchronized traffic at massive scale.

That’s why Ultra Ethernet is a credible candidate to become the default Ethernet profile for AI fabrics, and potentially the “new normal” for next-generation data centres.

Why the market momentum matters

Networking standards don’t win only because they’re technically good. They win because of ecosystem momentum: vendor support, interoperability, switching silicon roadmaps, tooling, operational maturity, and talent availability.

Ethernet already has that momentum. So the most realistic path forward for AI networking isn’t always “replace Ethernet with something else.” It’s “make Ethernet fit the new workload.”

Ultra Ethernet sits exactly on that path.

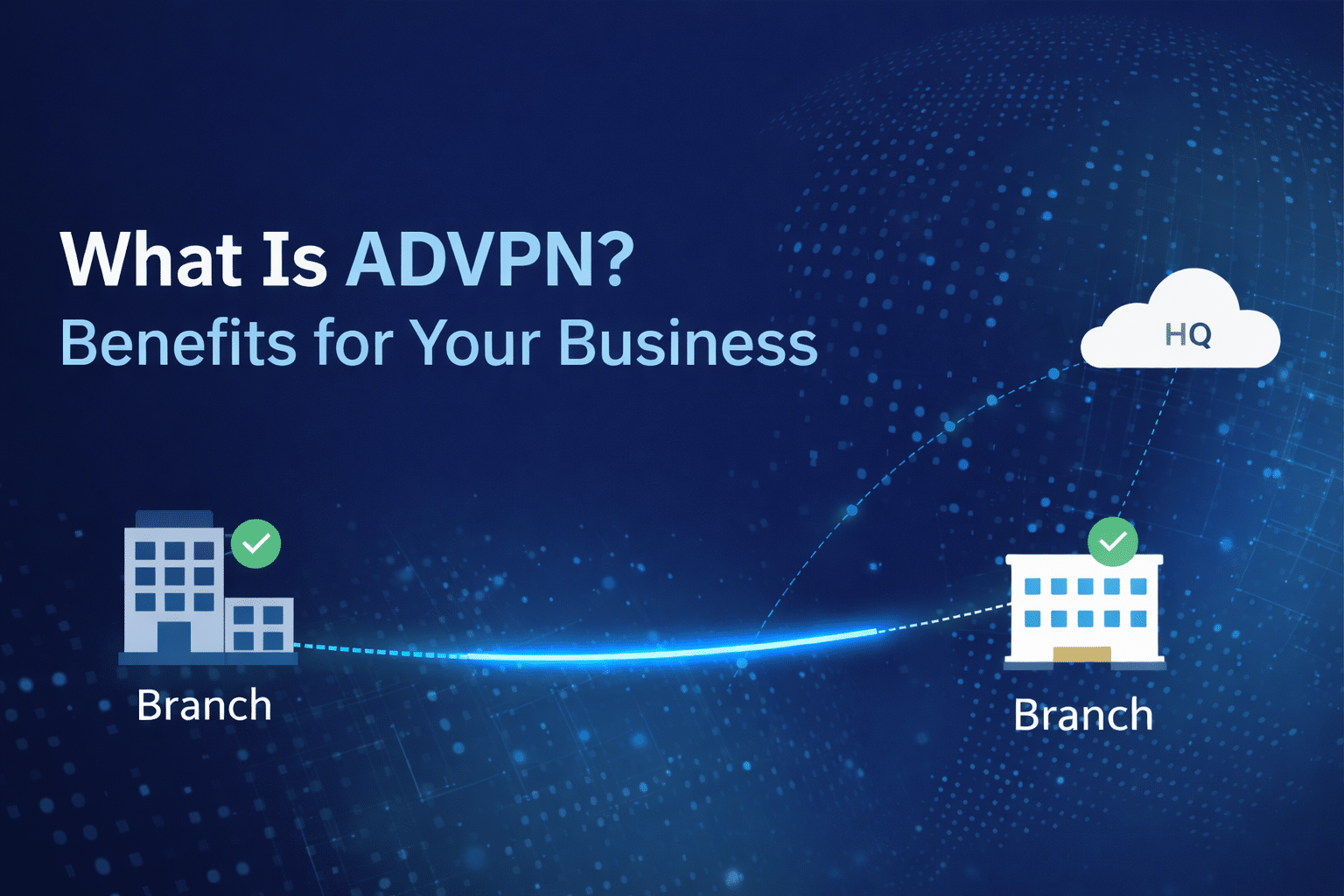

What this means for businesses (even if you don’t run an AI cluster)

Most organisations won’t deploy AI training fabrics. But the impact will reach everyone through:

- Cloud services: hyperscalers will build more AI-focused fabrics
- Future data centre designs: AI will influence architecture choices
- Network security and segmentation: higher-speed east-west traffic increases the importance of visibility and control
- Operational expectations: performance predictability becomes a KPI, not a nice-to-have

If your business relies on cloud AI services, data analytics, or large-scale processing, even indirectly, this evolution affects your cost, latency, and service quality.

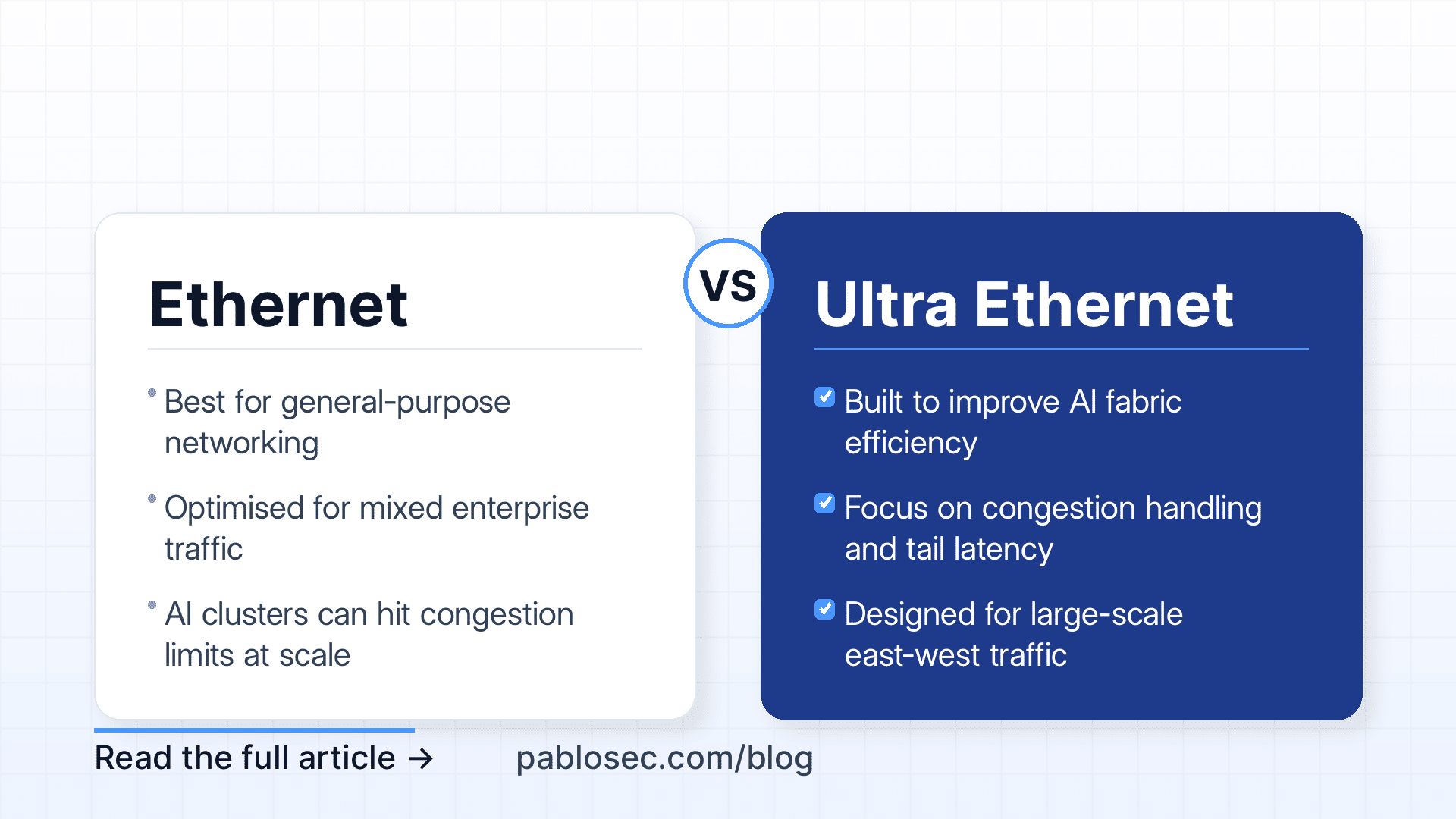

The security angle: faster networks, bigger blast radius

When east-west traffic grows, the network becomes a larger attack surface. High-performance fabrics must still deliver:

- segmentation and micro-segmentation
- monitoring and anomaly detection at high throughput
- strong identity and access controls
- resilient architecture that assumes failure

Performance without security is not progress. It’s just faster risk.

Final thought

Ultra Ethernet represents something bigger than another networking buzzword. It signals a shift: Ethernet is adapting to become the fabric of the AI era, not only the fabric of the traditional data centre.

It may not “replace” Ethernet in the way people imagine, but it is very likely to define what Ethernet looks like in next-generation AI infrastructure.

You can learn more at https://ultraethernet.org/ or by downloading the specification document from https://ultraethernet.org/uec-1-0-spec