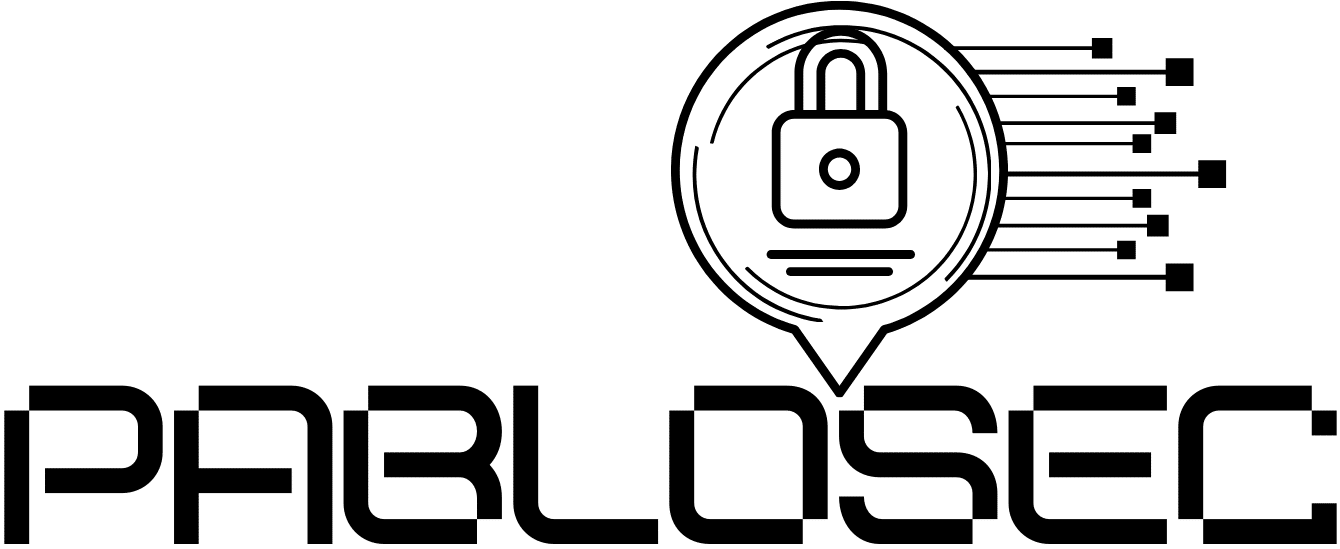

What changes, why it matters, and when you actually need them

Most organisations buy a firewall for security, not microseconds. But as networks evolve (AI traffic, east-west flows, low-latency trading platforms, real-time control systems, VoIP at scale), latency becomes a security requirement too, because performance problems quickly turn into availability problems.

One place latency is often misunderstood: the firewall’s network interfaces.

You’ll see terms like ultra-low latency, high-performance ports, bypass NICs, cut-through switching, DPDK, SR-IOV, kernel bypass, or smartNIC/DPU. They’re all trying to solve the same core issue:

A standard interface is designed for general networking.

An ultra-low-latency interface is designed to minimise every step between “packet arrives” and “packet leaves.”

This article explains the difference in practical firewall terms.

First: where “firewall latency” really comes from

Latency isn’t only about the interface speed (1G/10G/25G/100G). It’s about the whole packet path:

- Ingress (NIC) → CPU/ASIC

- Classification (VLAN, routing, session lookup)

- Security inspection (policy, IPS/AV, TLS inspection, app control, etc.)

- Egress scheduling (queues, shaping, prioritisation)

- Transmit (NIC)

A “normal” interface is fine when the firewall is not stressed, traffic is predictable, and workloads are typical.

But under heavy load (or with latency-sensitive flows), small delays in interrupt handling, buffering, queueing, and memory copies can stack up.
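To make the "whole packet path" idea concrete, here is a minimal sketch that adds up a per-stage latency budget and shows each stage's share. The stage names follow the list above; every number is an invented placeholder, not a measurement from any real firewall, so substitute your own figures.

```python
# Illustrative per-stage delays in microseconds.
# All values are hypothetical placeholders, not vendor or lab measurements.
STAGES_US = {
    "ingress": 2.0,
    "classification": 1.5,
    "inspection": 40.0,
    "egress_scheduling": 3.0,
    "transmit": 2.0,
}

def latency_budget(stages_us):
    """Return the total latency and each stage's fraction of that total."""
    total = sum(stages_us.values())
    shares = {name: delay / total for name, delay in stages_us.items()}
    return total, shares

total_us, shares = latency_budget(STAGES_US)
for name, share in shares.items():
    print(f"{name:18s} {STAGES_US[name]:6.1f} us  {share:6.1%}")
print(f"{'total':18s} {total_us:6.1f} us")
```

A table like this is mainly useful for deciding where to look first: if one stage dominates, tuning the others will barely move the total.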

What makes an interface “ultra-low latency” in a firewall context?

Ultra-low-latency designs focus on reducing:

1) Buffering and queueing delay

Standard NICs and drivers often buffer packets for efficiency. That’s great for throughput, but it can add jitter (latency variation).

Ultra-low-latency interfaces aim for:

- Smaller, smarter buffers

- Better queue control

- Reduced jitter under load
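A rough way to see why buffer depth matters: a packet that arrives behind a queue has to wait while everything ahead of it is serialised onto the wire. The sketch below does that arithmetic. It assumes a simple FIFO queue and ignores Ethernet framing overhead, and the function name `queue_wait_us` is made up for illustration.

```python
def queue_wait_us(packets_ahead, packet_bytes, link_gbps):
    """Microseconds a packet waits while the packets ahead of it are serialised.

    Simplified model: FIFO queue, fixed packet size, no framing overhead.
    """
    bits_ahead = packets_ahead * packet_bytes * 8
    seconds = bits_ahead / (link_gbps * 1e9)
    return seconds * 1e6

# Example: 1500-byte packets on a 10 Gb/s link at several queue depths.
for depth in (1, 64, 512, 4096):
    print(f"{depth:5d} packets queued -> {queue_wait_us(depth, 1500, 10):9.1f} us")
```

One 1500-byte packet on a 10 Gb/s link takes about 1.2 microseconds to serialise, so a queue that is hundreds of packets deep can add hundreds of microseconds when it fills.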

2) CPU overhead (interrupts, context switches, memory copies)

Traditional packet processing often relies on:

- Interrupts (CPU gets “poked” per packet or batch)

- Kernel networking stack

- Multiple memory copies

Low-latency NIC stacks often use:

- Polling modes (reducing interrupt storms)

- Kernel bypass frameworks (e.g., user-space packet I/O)

- Zero-copy / fewer-copy paths

- More predictable CPU scheduling
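To see why per-packet overhead matters so much, it helps to work out how little time there is per packet at line rate. The sketch below computes the maximum packet rate and the resulting time budget per packet, counting the 20 bytes of preamble and inter-frame gap that Ethernet adds to every frame on the wire. The function names are illustrative only.

```python
# Every Ethernet frame also occupies 8 bytes of preamble/SFD
# and 12 bytes of inter-frame gap on the wire.
ETHERNET_OVERHEAD_BYTES = 20

def max_pps(link_gbps, frame_bytes):
    """Maximum frames per second at line rate for a given frame size."""
    bits_on_wire = (frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_on_wire

def per_packet_budget_ns(link_gbps, frame_bytes):
    """Nanoseconds available to handle each frame at line rate."""
    return 1e9 / max_pps(link_gbps, frame_bytes)

for frame in (64, 512, 1518):
    print(
        f"{frame:5d}-byte frames at 10G: "
        f"{max_pps(10, frame) / 1e6:6.2f} Mpps, "
        f"{per_packet_budget_ns(10, frame):7.1f} ns per packet"
    )
```

At minimum-size frames on 10G the budget is roughly 67 nanoseconds per packet, which leaves very little room for an interrupt, a context switch, or an extra memory copy on each one.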

3) Deterministic behaviour under stress

The goal isn’t only “lower average latency.”

It’s less tail latency: those rare but painful spikes that break real-time applications.
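A small sketch shows why the average hides the problem. It uses synthetic, invented latency samples and a simple nearest-rank percentile (one of several common percentile definitions) to compare the mean against the tail.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least
    pct percent of all samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

# Synthetic data in microseconds (invented for illustration):
# 980 fast packets and 20 slow ones.
latencies_us = [10.0] * 980 + [500.0] * 20

mean_us = sum(latencies_us) / len(latencies_us)
p50_us = percentile(latencies_us, 50)
p99_us = percentile(latencies_us, 99)

print(f"mean {mean_us:.1f} us, p50 {p50_us:.1f} us, p99 {p99_us:.1f} us")
```

In this made-up data set the mean and median both look healthy, while the 99th percentile is the number a real-time application would actually feel.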

Standard interfaces: what they’re optimised for

Standard firewall interfaces are typically tuned for:

- Compatibility (drivers, OS features, broad support)

- Throughput per cost

- General enterprise behaviour (bursty traffic, mixed workloads)

They’re a great choice when:

- You’re securing office networks and internet edges

- Your bottleneck is inspection features, not packet I/O

- Latency sensitivity is “nice to have,” not mission-critical

Most networks should start here. If you haven’t measured a real latency problem, don’t pay to solve one.

Ultra-low-latency interfaces: what you gain (and what you trade)

You gain

- Lower and more stable latency (especially under load)

- Better handling of microbursts

- More predictable performance for real-time flows

- In some designs, fail-open capabilities (bypass) to preserve connectivity if the appliance fails

You trade

- Cost (interfaces and platform are more expensive)

- Potential feature constraints (some optimisations reduce flexibility)

- More engineering effort (tuning queues, CPU pinning, bypass modes, etc.)

- Operational maturity required (monitoring and testing matter more)

Firewall scenarios where ultra-low latency actually matters

If you want a simple filter, ask:

“Do I care about microseconds or only about milliseconds?”

You typically need ultra-low-latency interfaces if you have:

- High-frequency or latency-sensitive transactions (trading systems, low-latency payments, real-time bidding)

- Real-time voice/video at large scale, where jitter spikes create user-visible failures

- East-west data centre traffic with strict SLOs, especially when the firewall is in-line between compute clusters

- Industrial/OT environments, where control loops or SCADA protocols are sensitive to timing

- DDoS-heavy or packet-rate-heavy edges, where packet-per-second (pps) and tail latency are the battle

If your primary issues are:

- “My firewall throughput drops when I enable TLS inspection,” or

- “My ISP link is congested,”

…then the interface type is rarely the fix. That’s about sizing, policy design, and inspection strategy.

Don’t confuse “low latency ports” with “fast firewall”

This is the common mistake:

Buying ultra-low-latency interfaces doesn’t automatically make the firewall low latency.

If your firewall is doing deep inspection (IPS, AV, sandboxing, TLS decryption), the dominant delay may be inspection time, not the NIC.

Ultra-low-latency interfaces help most when:

- Your policies are efficient, and

- Your security design avoids unnecessary heavy inspection on latency-critical flows, and

- The bottleneck is packet I/O consistency (pps, jitter, tail latency)

A practical approach: split traffic by sensitivity

A high-quality design often looks like this:

- Latency-critical apps are routed through minimal, strict controls (tight allowlists, segmentation, identity controls) with careful inspection selection

- General business traffic gets full NGFW inspection

- Bulk / background traffic gets shaping and cost-aware routing

This is how you keep security strong without turning the firewall into a latency generator.
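As a rough illustration, the three-way split can be written down as a simple decision function. This is a sketch of the idea only, not any firewall's policy language; the `Flow` fields and the treatment labels are hypothetical names chosen for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Flow:
    """Hypothetical flow description used only for this example."""
    app: str
    latency_critical: bool = False
    bulk: bool = False

def select_treatment(flow):
    """Map a flow to one of the three treatments described above."""
    if flow.latency_critical:
        # Tight allowlists, segmentation, identity controls, selective inspection.
        return "minimal-strict-controls"
    if flow.bulk:
        return "shaping-and-cost-aware-routing"
    # Everything else is treated as general business traffic.
    return "full-ngfw-inspection"

flows = [
    Flow("order-gateway", latency_critical=True),
    Flow("office-web"),
    Flow("nightly-backup", bulk=True),
]
for flow in flows:
    print(f"{flow.app:15s} -> {select_treatment(flow)}")
```

Checking the latency-critical case first means a flow tagged as both critical and bulk still gets the low-latency path, which is a design choice you may want to reverse.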

Quick checklist: should you consider ultra-low-latency interfaces?

Answer “yes” to 2+ and it’s worth a deeper look:

- Do you have measured jitter/tail latency problems at the firewall?

- Are you operating at very high pps (not just high bandwidth)?

- Do microbursts cause drops or intermittent performance issues?

- Are you protecting real-time services with strict latency SLOs?

- Are you forced to keep the firewall in-line (no alternative architecture)?

If not, a well-sized firewall with standard interfaces, clean policies, and correct inspection design usually wins.
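If you want to run the checklist across several sites, the "2+ yes answers" rule is easy to encode. The keys below are shorthand labels made up for the five questions; the threshold of two comes from the checklist itself.

```python
# Shorthand keys for the five checklist questions (names are illustrative).
CHECKLIST = (
    "measured_jitter_or_tail_latency",
    "very_high_pps",
    "microburst_drops",
    "strict_latency_slos",
    "must_stay_inline",
)

def worth_deeper_look(answers, threshold=2):
    """Return True when at least `threshold` questions are answered yes.

    Questions missing from `answers` are counted as "no".
    """
    yes_count = sum(1 for key in CHECKLIST if answers.get(key, False))
    return yes_count >= threshold

site_answers = {"very_high_pps": True, "microburst_drops": True}
print(worth_deeper_look(site_answers))
```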

Final thought

Ultra-low-latency interfaces aren’t about “bigger numbers.” They’re about predictable packet handling when networks are pushed hard—and that’s increasingly relevant as AI-era traffic patterns and real-time services become the norm.

If you tell us your environment (internet edge vs DC, link speeds, expected pps, inspection requirements, and the apps that must stay smooth), we can recommend whether standard interfaces are enough or whether ultra-low-latency hardware and tuning would make a measurable difference.